Implementation follows design. Finally I can sling some code ;-) But where to start? Analysis and design left me with some options. There is not just an Interpolate() method like Robert C. Martin had, but several classes and methods.

On the surface of the system under development there are:

TerrainGenerator.Interpolate()Terrain.SizeTerrain.this[]Terrain.ToArray()

And below the surface I found:

ShapeHierarchy.ShapesCalculator.Process

Interpolate() will be simple to do once all other modules are coded. It’s the integrating function, it represents the whole of the algorithm to implement. I’ll do it last.

Implementing Terrain

The analysis already provided some test for Interpolate(). So since Terrain does not depend on other classes, it shall be the first module to code.

The tests are straightforward. I write them both down:

[TestFixture]

public class test_Terrain {

[Test]

public void Terrains_are_squares_of_size_2n1() {

var t = new Terrain(1);

var m = t.ToArray();

Assert.IsTrue(m.GetLength(0) == m.GetLength(1));

Assert.AreEqual(t.Size, m.GetLength(0));

var size = new Terrain(3).Size;

Assert.AreEqual(9, size);

}

[Test]

public void Terrains_are_initialized() {

var t = new Terrain(3, 1.0f, 2.0f, 3.0f, 4.0f);

Assert.AreEqual(1.0f, t[0, 0]);

Assert.AreEqual(2.0f, t[0, t.Size-1]);

Assert.AreEqual(3.0f, t[t.Size-1, t.Size-1]);

Assert.AreEqual(4.0f, t[t.Size-1, 0]);

}

}

No stepwise testing development needed as TDD advocates, because the implementation is trivial:

public class Terrain {

private float[,] matrix;

public Terrain(int n) {

this.N = n;

var size = (int)Math.Pow(2,n) + 1;

matrix = new float[size, size];

}

public Terrain(int n, float leftTop, float rightTop, float rightBottom, float leftBottom) : this(n) {

matrix[0, 0] = leftTop;

matrix[0, Size - 1] = rightTop;

matrix[Size - 1, Size - 1] = rightBottom;

matrix[Size - 1, 0] = leftBottom;

}

public int N { get; }

public int Size => matrix.GetLength(0);

public float this[int y, int x] {

get { return matrix[y, x]; }

set { matrix[y, x] = value; }

}

public float[,] ToArray() => matrix;

}

Terrain is almost a pure data structure, very little logic is needed. The interesting stuff is one line to calculate the size of the square and a couple of lines to initialize the vertexes.

[the_ad_group id=“15″]

Implementing Calculator

Calculator seems to be the next easiest class. Just one public method (although a user of the solution won’t see it).

But Calculator.Process() consist of two aspects: averaging and jitter. I take a TDD approach and start with just calculating an average:

[Test]

public void No_jitter() {

var sut = new Calculator(0, 0, () => 0.0f);

Assert.AreEqual(2.0f, sut.Process(new[] { 1.0f, 2.0f, 3.0f }));

Assert.AreEqual(2.5f, sut.Process(new[] { 1.0f, 2.0f, 3.0f, 4.0f }));

}

The implementation is trivial using C# Linq:

class Calculator {

readonly float offset;

readonly float amplitude;

readonly Func<float> randomValue;

public Calculator(float offset, float amplitude, Func<float> randomValue) {

this.offset = offset;

this.amplitude = amplitude;

this.randomValue = randomValue;

}

public float Process(float[] values) {

return values.Average();

}

}

Now on to adding some randomness:

[Test]

public void With_jitter() {

var sut = new Calculator(6, 2, () => 1.0f);

Assert.AreEqual(8.0f, sut.Process(new[] { 0.0f }));

sut = new Calculator(6, 2, () => -1.0f);

Assert.AreEqual(4.0f, sut.Process(new[] { 0.0f }));

}

Since the average does not need to be checked again I just pass in 0.0f as a single value. That way the random value (based on offset and amplitude) will be the result of Process().

Using 1.0f and -1.0f as „random“ values tells me if the amplitude is able to increase and decrease the offset:

public float Process(float[] values) {

var result = values.Average();

return result + this.offset + this.amplitude * this.randomValue();

}

I could have put adding the jitter into a method of its own. But since Process() is only two lines in total and focussing on the jitter by choosing certain input values is very easy, I chose not to add another module (yet).

Implementing ShapeHierarchy

Implementing the shape hierarchy is the most challenging part of the whole thing. It’s the heart of the algorithm. I’ve to be careful to get it right. Small steps seem prudent.

First two „acceptance tests“, i.e. tests of the overall functionality. Once they become green I deem the implementation of ShapeHierarchy.Shapes done.

I need to check if shapes of the correct number and with the correct center and vertexes get produced for certain sizes. It’s not important if those shapes represent diamonds or squares. Averages are calculated just from vertex values on a terrain.

[Test]

public void Size3() {

var sut = new ShapeHierarchy(1);

var shapes = sut.Shapes.ToArray();

Assert.AreEqual(5, shapes.Length);

Assert.AreEqual("[1,1]@([0,0],[0,2],[2,2],[2,0])", shapes[0].ToString());

Assert.AreEqual("[0,1]@([0,2],[1,1],[0,0])", shapes[1].ToString());

Assert.AreEqual("[2,1]@([1,1],[2,2],[2,0])", shapes[2].ToString());

Assert.AreEqual("[1,0]@([0,0],[1,1],[2,0])", shapes[3].ToString());

Assert.AreEqual("[1,2]@([0,2],[2,2],[1,1])", shapes[4].ToString());

}

[Test]

public void Size5() {

var sut = new ShapeHierarchy(2);

var shapes = sut.Shapes.ToArray();

Assert.AreEqual(21, shapes.Length);

Assert.AreEqual("[2,2]@([0,0],[0,4],[4,4],[4,0])", shapes[0].ToString());

Assert.AreEqual("[2,4]@([0,4],[4,4],[2,2])", shapes[4].ToString());

Assert.AreEqual("[1,1]@([0,0],[0,2],[2,2],[2,0])", shapes[5].ToString());

Assert.AreEqual("[0,1]@([0,2],[1,1],[0,0])", shapes[9].ToString());

}

To make comparison easy I use the ToString() function of the Shape and Coordinate data structures to get shapes serialized. That way I just need to check string equality and don’t need to implement Equal() methods ;-) An added benefit: whenever something should go wrong with shapes and I need to debug, the IDE will readily show me shape contents as nicely formatted strings.

Of course I need to do the calculation of the expected shape coordinates by hand :-( But the acceptance tests I described earlier help:

I encoded the level of the shapes in their cell color (level 1: dark blue, light blue, level 2: dark green, light green) and chose a certain order for their calculation (squares will start with the north-west vertex, diamonds with the north vertex).

But what now? How to proceed with the implementation?

Some more design

I don’t like to drive an implementation just through the bottleneck of a single function. That might be ok for some very small functions like Calculator.Process(). But this here is more complicated.

Instead I insert another small design phase. Let me see if I can derive some more structure from the requirements for this specific method.

From what I can see in the specs there are squares and diamonds on each level. For a 3×3 terrain it’s one square and 4 diamonds on just one level where their radius is 1 (2^(n-1)). For a 5×5 terrain it’s one square and 4 diamonds on the first level with radius 2, and then 4 squares and 12 diamonds on a second level with radius 1.

There are levels with decreasing radius starting from 2^(n-1) each containing squares and diamonds which are independent of each other. Instead of a big problem there are now two small problems – and the big problem repeated on a lower level. Recursion to the rescue!

What I need are 3 functions:

- Creating all shapes on a certain level with the appropriate radius – and in the end calling itself again for the next lower level.

- Creating all squares with a certain radius.

- Creating all diamonds with a certain radius.

The recursive function will be called by the Shapes property. And it’s easy to do: just concat the streams of squares and diamonds on one level with the stream of shapes from the next lower level.

public IEnumerable<Shape> Shapes { get {

var size = (int)Math.Pow(2.0, this.n) + 1;

var r = (int)Math.Pow(2.0, (double)(n - 1));

return Enumerate_shapes(size, r);

}

}

IEnumerable<Shape> Enumerate_shapes(int size, int r) {

if (r < 1) return new Shape[0];

return Enumerate_squares(size, r)

.Concat(Enumerate_diamonds(size, r))

.Concat(Enumerate_shapes(size, r / 2)); // recursion

}

There are tests for Shapes, but no tests for Enumerate_shapes() are needed. It’s a small plain integration function. I’ll know from the tests on Shapes if it’s correctly wiring together its functions.

The real workhorses are IEnumerable<Shape> Enumerate_squares(int size, int r)and IEnumerable<Shape> Enumerate_diamonds(int size, int r). Both take a radius r to generate shapes of the correct size for the level, and the size of the overall terrain to not include coordinates beyond its boundaries.

This is no rocket science. I really just got that from carefully studying the specs. The images there are telling this story. (And don’t get confused with „diamond step“ and „square step“. The diamond step actually calculates the center value of a square, and the square step calculates the center values of diamonds. At least that’s what I see in those images.)

Implementing private functions

The newly found shape enumeration functions are small, but a little bit tricky. I need to get the center point calculation right. It’s simple for the only square on the first level:

[Test]

public void Squares_size3_radius1() {

var squares = ShapeHierarchy.Enumerate_squares(3, 1).ToArray();

Assert.AreEqual(1, squares.Length);

Assert.AreEqual("[1,1]@([0,0],[0,2],[2,2],[2,0])", squares[0].ToString());

}

But how about the 16 squares on the third level of a 9×9 terrain? It’s too many for me to check all of them. So I’m doing just a spot check:

[Test]

public void Squares_size9_radius1()

{

var squares = ShapeHierarchy.Enumerate_squares(9, 1).ToArray();

Assert.AreEqual(16, squares.Length);

Assert.AreEqual("[1,1]@([0,0],[0,2],[2,2],[2,0])", squares[0].ToString());

Assert.AreEqual("[5,7]@([4,6],[4,8],[6,8],[6,6])", squares[11].ToString());

}

Here’s the implementation. Creating a shape at x/y with a certain radius is easy. I just need to be careful to calculate the vertexes in the order I expect in the tests. What’s a bit tricky is to vary x and y across the terrain on a level: where to start, how much distance between the center points?

public static IEnumerable<Shape> Enumerate_squares(int size, int r) {

for (var y = r; y < size; y += 2*r)

for (var x = r; x < size; x += 2*r) {

yield return new Shape {

Center = new Coordinate(y, x),

Vertexes = new[]{

new Coordinate(y-r, x-r), // north-west

new Coordinate(y-r, x+r), // north-east

new Coordinate(y+r, x+r), // south-east

new Coordinate(y+r, x-r) // south-west

}

};

}

}

But again with careful analysis of the specs it’s pretty straightforward ;-) And the same is true for diamonds, although they are generated with two sequential loops.

Integration

With all the details implemented and tested I can move on to integrate them. That’s the sole purpose of Interpolate(). It does not do anything on it’s own; it does not contain logic. It just pulls together all the parts and welds them together into a whole.

static void Interpolate(Terrain terrain, float offset, float amplitude, Func<float> randomValue)

{

var sh = new ShapeHierarchy(terrain.N);

var calc = new Calculator(offset, amplitude, randomValue);

foreach (var s in sh.Shapes) {

var vertex_values = s.Vertexes.Select(c => terrain[c.Y, c.X]);

var terrain_value = calc.Process(vertex_values.ToArray());

terrain[s.Center.Y, s.Center.X] = terrain_value;

}

}

That’s no rocket science. All shapes come in the right order from the ShapeHierarchy; their vertex values get collected from the Terrain; the center value is calculated from those values. That’s it. Very straightforward.

And it makes the original acceptance tests go green! Success! :-)

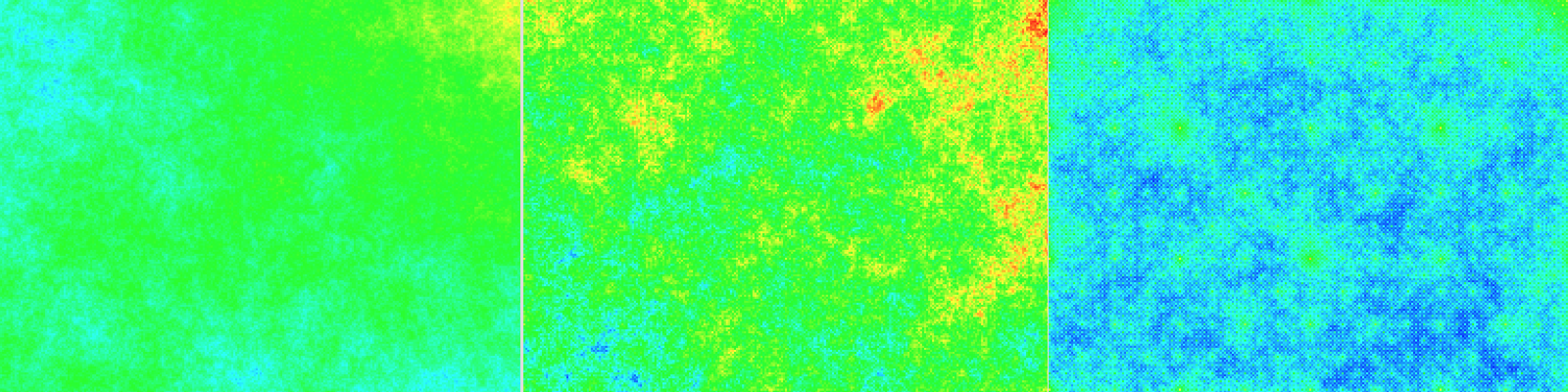

Manual acceptance tests

The automatic tests tell me the implementation is finished. Problem solved. But can I trust them? A green test of 25 floating point values is not very tangible. For me at least. Also the tests for the shape generation might be green – but what does that mean for realistically sized terrains? I’m unable to write tests for 1025×1025 terrains.

Enter the test station. Now I can run it to put terrain generation to a real test. Will I be visually pleased by the calculated terrains?

See for yourself:

I think this terrain (and others) is looking pretty good. And if not, I guess it’s more the fault of my kind of visualization than of the algorithm’s implementation.

Removing scaffolding tests

Since I did not progress according to the TDD steps but did explicit design there’s no need for refactoring. The solution is already in good, clean shape. At least to my taste.

Nevertheless I will do some clean up. I will remove tests.

Yes, I think there are too many tests. All tests of methods which should not be visible on the surface should be deleted. They become apparent when I set the visibility of methods like Enumerate_Squares() to private.

Tests working on those methods were useful while building the solution. Like a scaffold is useful while building a house. But once the house is finished, the scaffold is removed. That’s why I remove those tests. They would be white box tests and make the test suite brittle.

The only tests I keep are those of public methods. They are my bulwark against regressions should I need to change the codebase. That of course requires them to be comprehensive so that enough code paths are covered.

Summary

That completes my journey through the terrains of the Diamond-Square algorithm. It was fun. A different kind of problem than I usually tackle.

I hope you found it interesting to watch the solution unfold along a different path than TDD would suggest. TDD to me has value and it’s place – but I also think it needs to be padded by comprehensive analysis and explicit design. Neither code nor design should be a matter of surprise or afterthought. „Think before coding“ is the way I suggest for truly clean code.

- Part 1 – Analysis

- Part 2 – Design

- Part 3 – Implementation